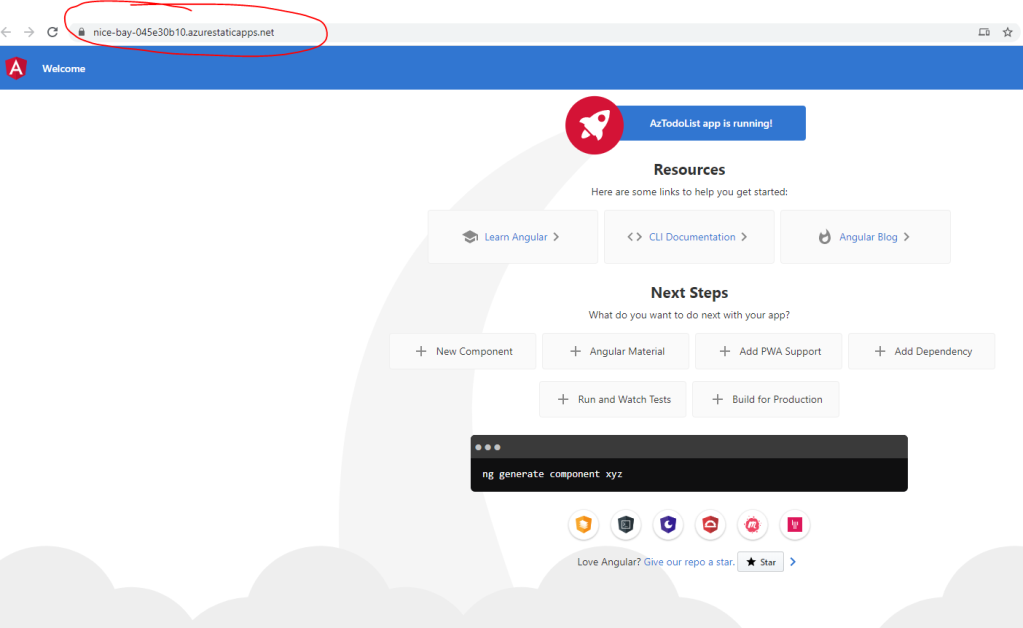

In this series about Azure Static Web Apps, we created an Angular todo list app and an Azure Function written in JavaScript, then linked the two together. If you want to look at the previous parts, here are the links:

- https://haithamshaddad.com/2020/05/28/create-an-angular-todo-list-with-azure-static-web-apps-part-1/

- https://haithamshaddad.com/2020/06/05/create-an-angular-todo-list-with-azure-static-web-apps-part-2/

- https://haithamshaddad.com/2020/06/06/create-an-angular-todo-list-with-azure-static-web-apps-part-3/

In this post, we will store the todos in Cosmos DB and read them from our Azure Function.

Create Cosmos DB

Azure Cosmos DB gives you a free account with up to 400 RU/s, which is enough for this demo. In the Azure Portal, click New Resource, select Azure Cosmos DB under Databases, and choose SQL API as the API. Enter the account name, resource group, and region, leave everything else at its default value, then click Review + Create and then Create.

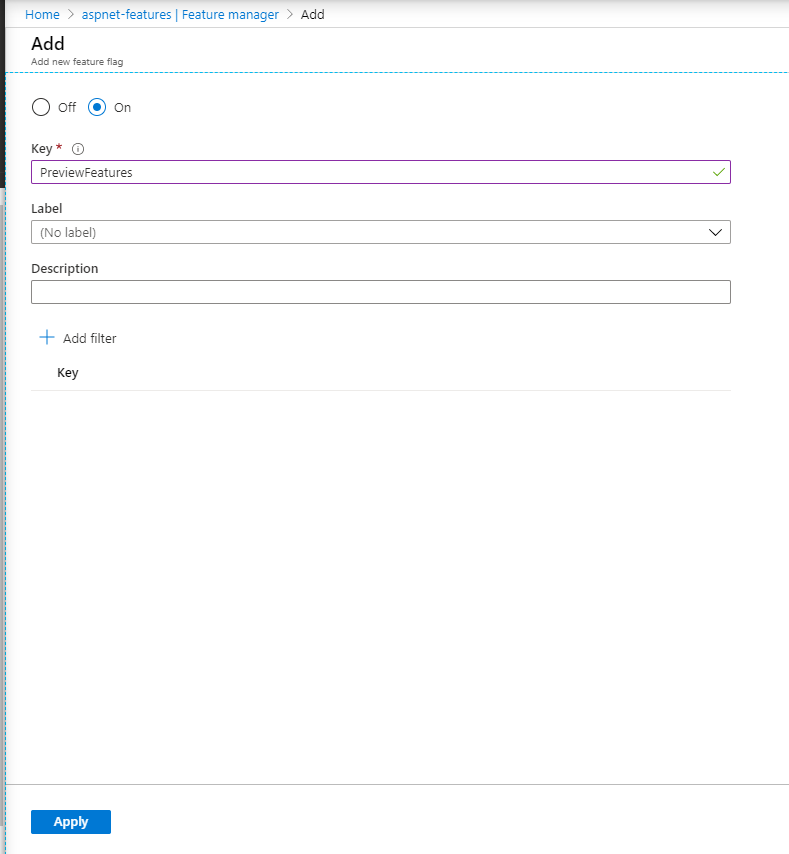

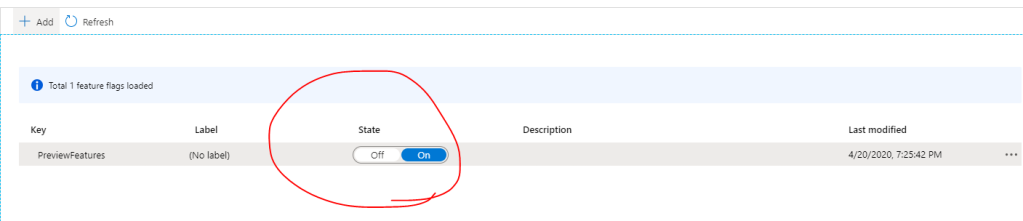

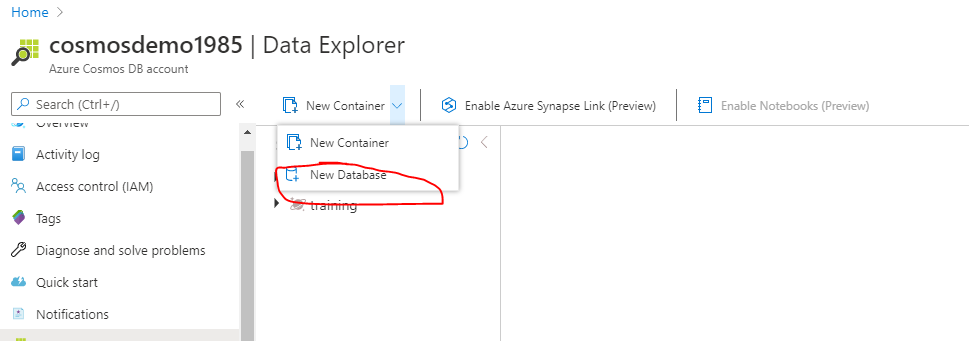

Once the Azure Cosmos DB account is created, open it and go to Data Explorer. From the toolbar, click New Database and give it a name.

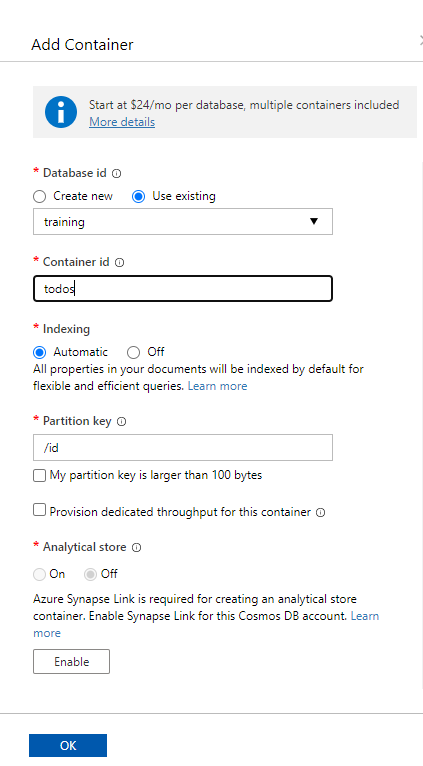

Once the database is created, click the three dots next to the database name and click New Container. Provide a name for the container, type /id as the partition key, then click OK.

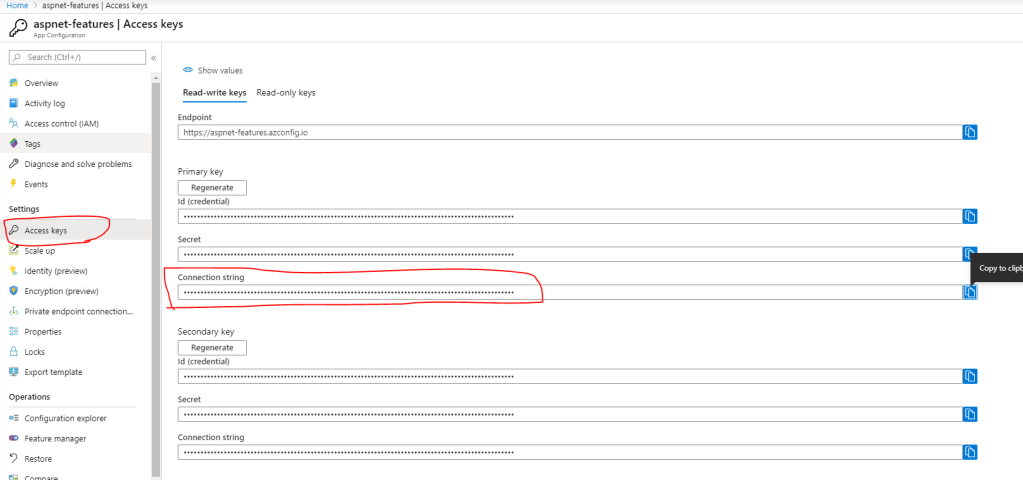

From the left menu click Keys and copy the PRIMARY CONNECTION STRING.
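For orientation, the primary connection string is a single line of semicolon-separated `Key=Value` pairs, typically `AccountEndpoint` and `AccountKey`. The sketch below splits such a string into its parts. `parseConnectionString` is a hypothetical helper written only for illustration, and the sample value is made up. The Cosmos SDK accepts the full string directly, so the app itself does not need this.

```javascript
// Hypothetical helper: splits "Key=Value;Key=Value;" into a plain object.
function parseConnectionString(connectionString) {
  const parts = {};
  for (const segment of connectionString.split(';')) {
    if (!segment.trim()) continue; // skip the empty piece after the trailing ';'
    // Split on the first '=' only: base64 keys often end with '=' padding.
    const index = segment.indexOf('=');
    if (index === -1) continue;
    parts[segment.slice(0, index).trim()] = segment.slice(index + 1).trim();
  }
  return parts;
}

// Made-up sample value, not a real account or key.
const sample =
  'AccountEndpoint=https://example.documents.azure.com:443/;AccountKey=ZmFrZS1rZXk=;';
const parsed = parseConnectionString(sample);
console.log(Object.keys(parsed));
```

Treat the whole string as a secret: anyone holding the account key can read and write the data.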

Azure Function Settings

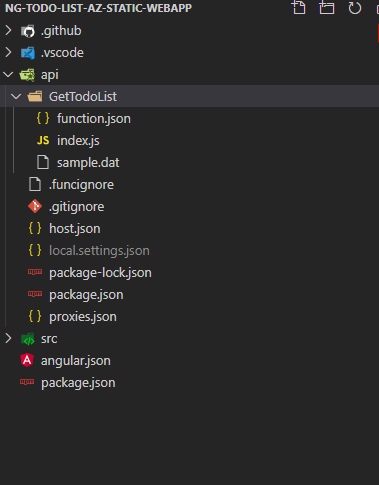

To connect to Azure Cosmos DB, we will need to store the connection string somewhere. For this purpose, we will use the local.settings.json file under the api folder.

Add a new key under the Values key, name it CosmosConnectionString, and paste the Cosmos DB connection string you copied earlier as its value.

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "",
        "FUNCTIONS_WORKER_RUNTIME": "node",
        "CosmosConnectionString": ""
      },
      "Host": {
        "CORS": "*"
      }
    }

The local.settings.json file is not committed to Git, and it should not be, because it holds the connection strings and any other secrets used locally.
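When you run the project locally, the Functions tooling exposes the entries under Values as environment variables, which is why the function can read them through `process.env`. A forgotten or empty setting otherwise surfaces only as a confusing connection error, so a small guard can help. The following is a minimal sketch; `getRequiredSetting` is a hypothetical helper, and the sample value is a placeholder rather than a real connection string.

```javascript
// Hypothetical helper: reads a required setting and fails with a clear message.
// `env` defaults to process.env; it is a parameter only to make the helper easy to try.
function getRequiredSetting(name, env = process.env) {
  const value = env[name];
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(
      `Missing application setting "${name}". Add it under Values in local.settings.json.`
    );
  }
  return value;
}

// Example with an explicit object standing in for process.env (placeholder value).
const fakeEnv = {
  CosmosConnectionString:
    'AccountEndpoint=https://example.documents.azure.com:443/;AccountKey=ZmFrZQ==;'
};
const connectionString = getRequiredSetting('CosmosConnectionString', fakeEnv);
console.log(connectionString.startsWith('AccountEndpoint='));
```

Inside the function you would call `getRequiredSetting('CosmosConnectionString')` without the second argument.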

Connect to Cosmos from the APIs

We now have the database ready and the connection string set in our API. To read data from Cosmos DB, we need to install the @azure/cosmos package. Open a command prompt, navigate to the api folder that contains the function project, and run the following command:

npm i @azure/cosmos

Now open the index.js file inside the api/GetTodoList folder and paste the following code:

    const CosmosClient = require('@azure/cosmos').CosmosClient;

    module.exports = async function (context, req) {
        context.log('JavaScript HTTP trigger function processed a request.');
        let cosmosClient = new CosmosClient(process.env['CosmosConnectionString']);
        let database = cosmosClient.database('todos');
        let container = database.container('todolist');
        let {resources} = (await container.items.query('SELECT c.id,c.title,c.isCompleted FROM c').fetchAll());
        context.res = {
            // status: 200, /* Defaults to 200 */
            body: resources
        };
    }

The code is straightforward. In line 1, we import the CosmosClient class, which is used to connect to Cosmos DB. It plays a role similar to an Entity Framework DbContext. In lines 5, 6, and 7, we create a client instance and use it to get a reference to the database and to the container that holds our data.

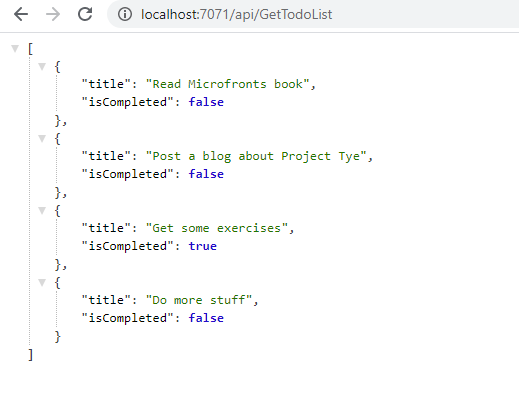

In line 8, we query the container to return only the id, title and isCompleted fields and return the results.
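If the query fails, for example because the database name or the connection string is wrong, the function as written lets the exception propagate. One way to turn that into an explicit HTTP response is sketched below. `buildTodoResponse` and the error message are hypothetical and not part of the original sample, and the `container` argument is assumed to be the container object created in lines 5 to 7. The fake container at the end exists only so the sketch can be tried without a Cosmos DB account.

```javascript
const TODO_QUERY = 'SELECT c.id, c.title, c.isCompleted FROM c';

// Hypothetical helper: runs the query and maps the outcome to a response object.
async function buildTodoResponse(container, log = console.error) {
  try {
    const { resources } = await container.items.query(TODO_QUERY).fetchAll();
    return { status: 200, body: resources };
  } catch (err) {
    // Log the detail on the server; return a generic message to the caller.
    log('Reading todos failed:', err.message);
    return { status: 500, body: { error: 'Could not load the todo list.' } };
  }
}

// In-memory stand-in that mimics only the calls used above.
const fakeContainer = {
  items: {
    query: () => ({
      fetchAll: async () => ({
        resources: [{ id: '1', title: 'Try Cosmos DB', isCompleted: false }]
      })
    })
  }
};

buildTodoResponse(fakeContainer).then((res) => console.log(res.status, res.body));
```

In the function itself, the last step would become `context.res = await buildTodoResponse(container, (...args) => context.log(...args));`.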

Notice that the field names are now camel-cased (title instead of Title), so you will have to update the bindings in the TodoList component:

<td><input type="checkbox" [checked]="todo.isCompleted" disabled></td>

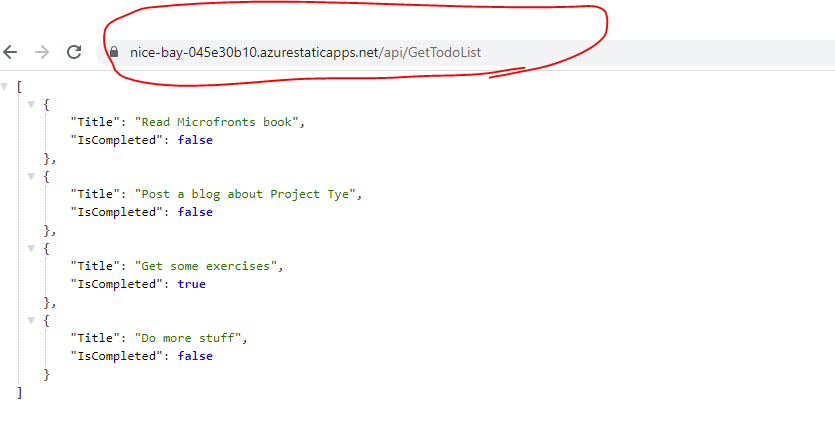

Test

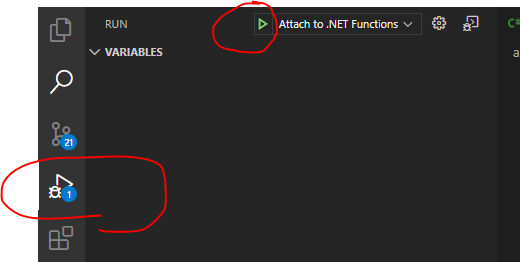

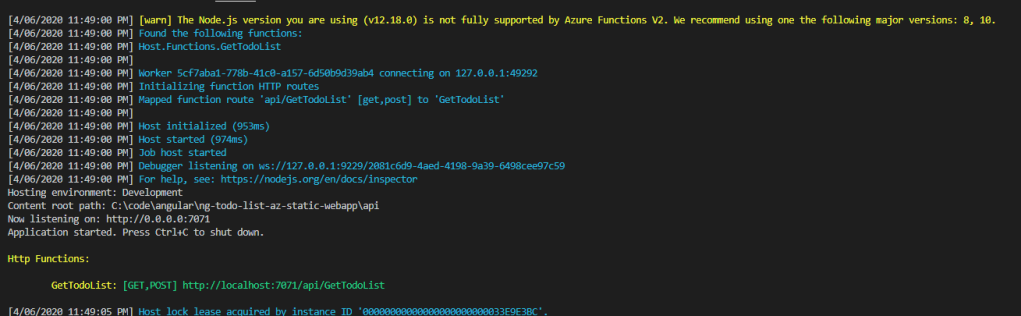

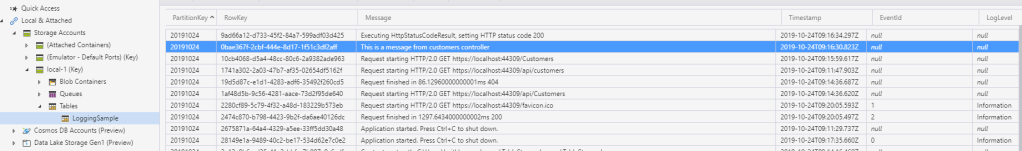

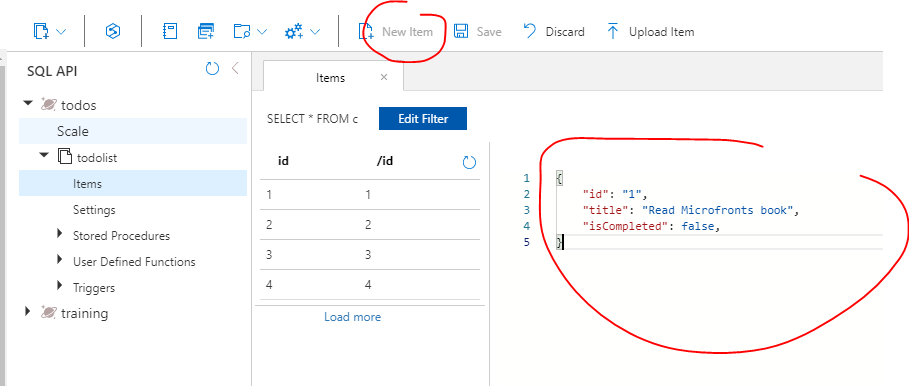

Run your API project with func start and your Angular app with ng serve. You should see an empty list. Go back to your Cosmos DB instance in the Azure Portal, click Data Explorer, select the database, then the container, and choose Items. To add an item, click the New Item button on the toolbar, enter its JSON, and click Save. Repeat the process to add a few items.
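Each item you save should carry the three fields the query selects, and because /id is the partition key, every item needs its own string id. The following sketch builds such items and prints one as JSON that can be pasted into the New Item editor. `makeTodoItem` and the sample titles are hypothetical and used only for illustration.

```javascript
// Hypothetical helper: builds an item with the fields the function's query selects.
function makeTodoItem(id, title, isCompleted = false) {
  // Cosmos DB requires `id` to be a string; here it also acts as the partition key.
  if (typeof id !== 'string' || id === '') {
    throw new Error('id must be a non-empty string');
  }
  return { id, title, isCompleted };
}

const sampleItems = [
  makeTodoItem('1', 'Create the Cosmos DB account', true),
  makeTodoItem('2', 'Read todos from the Azure Function')
];

// Pretty-printed JSON for the first item.
console.log(JSON.stringify(sampleItems[0], null, 2));
```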

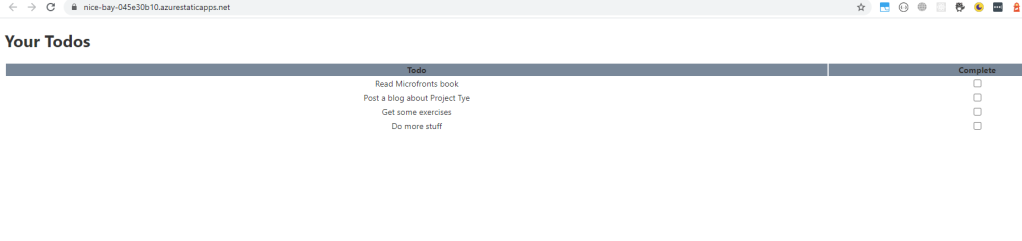

Now refresh your app and you should see the data displayed in the Angular app.

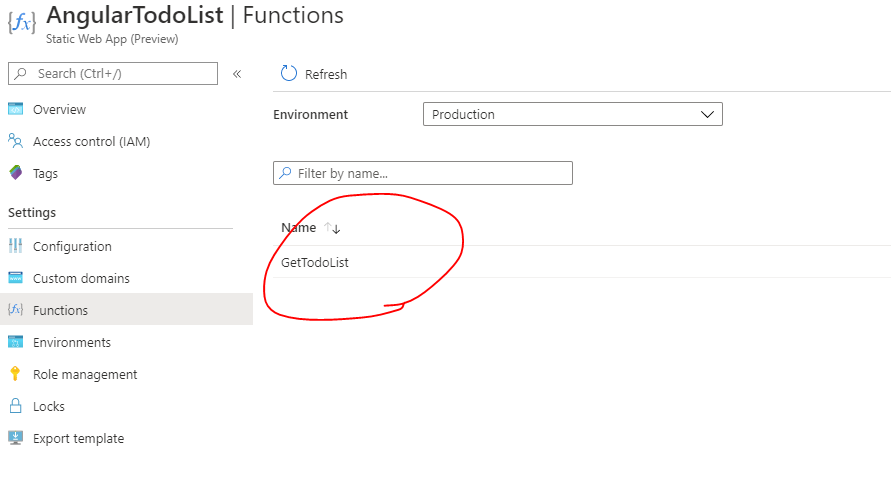

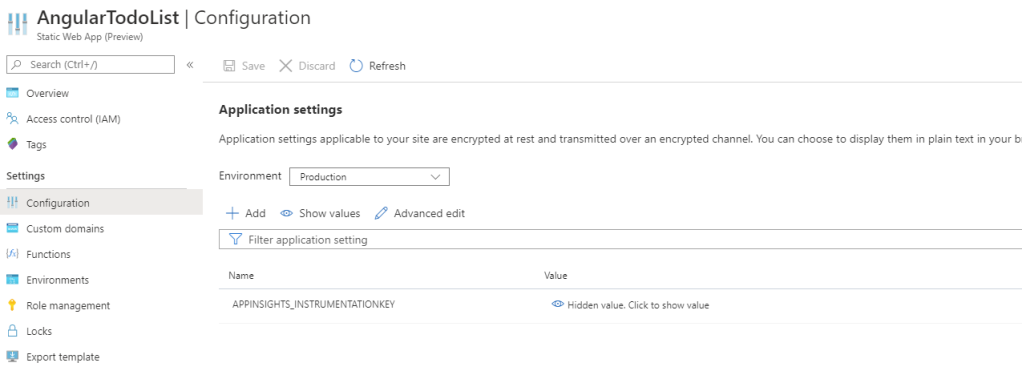

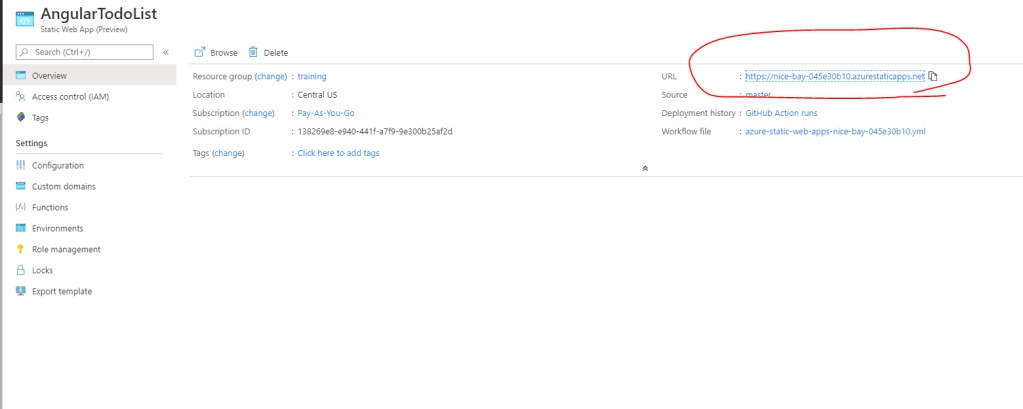

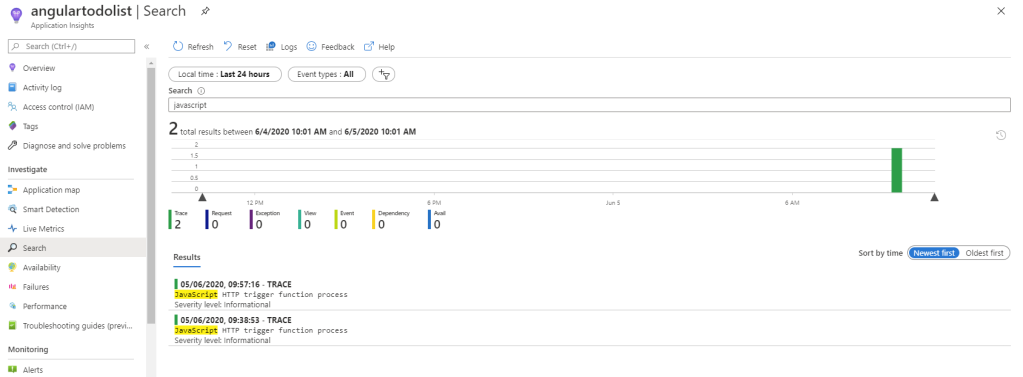

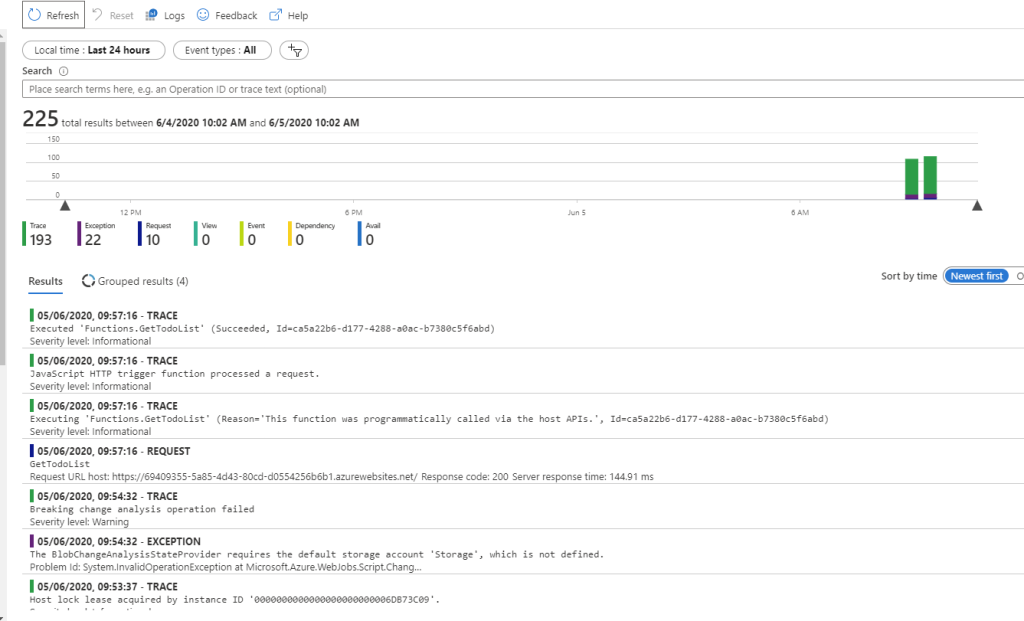

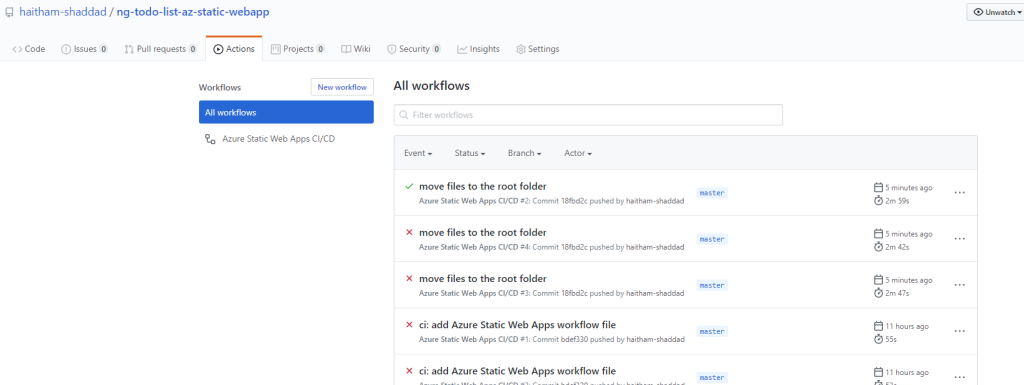

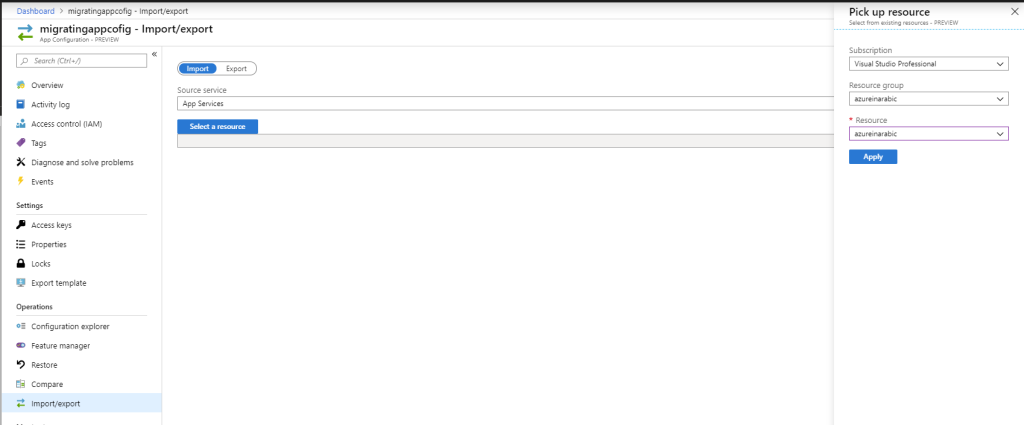

Deployment

Commit and push your changes and wait for the workflow action to finish. If you try your site now, it will not work, because the deployed function has no idea about the connection string. To add it, open your Azure Static Web App, click Configuration, and then click Add. Type CosmosConnectionString as the name and paste the Cosmos DB connection string in the value field. Save and refresh your app, and you should now see the application displaying the data you saved in Cosmos DB.

Source code: https://github.com/haitham-shaddad/ng-todo-list-az-static-webapp